Developing AI Literacy: A New Approach to Human-AI Interaction

In the current discourse surrounding artificial intelligence, the focus remains overwhelmingly on technological advancement—creating systems with greater capabilities, wider applications, and more autonomous functions. At BoodleBox, we’re exploring a fundamentally different question: How might we cultivate human discernment and agency in an era where AI makes it increasingly tempting to outsource our thinking?

The Challenge at the Human-AI Interface

Our conversations with educators and learning scientists have revealed that the most significant challenges in AI adoption aren’t about the technology itself, but about how it shapes human cognitive and social behaviors. Three concerning patterns have emerged:

- Replacing learning with shortcuts: When AI generates answers without the productive struggle that leads to deep understanding

- Building dependency instead of capability: When users become reliant on AI rather than developing their own skills

- Substituting human collaboration with AI interaction: When AI interaction displaces the social dimensions critical to how we learn and work together

Enhanced AI Coach Mode: A Framework for AI Literacy

This is why we’re developing Enhanced AI Coach Mode – a system that functions as a metacognitive scaffold for students, educators, and professionals. Currently in beta testing, this feature works across all AI models in the BoodleBox ecosystem, reflecting our commitment to democratizing access to responsible AI literacy.

Unlike conventional AI assistants that simply respond to queries, Enhanced AI Coach Mode uses pattern recognition to flag potentially problematic usage and respond with evidence-based interventions, all while maintaining rigorous privacy standards.

How It Works in Practice

The system employs several targeted approaches to help users develop better AI skills:

- Overreliance Detection: When a user attempts to outsource critical thinking entirely to AI with requests like “I need to write a research paper on climate change. Can you just write the whole thing for me?”, the coach provides guidance about academic integrity and suggests a more collaborative approach:

“Warning: Overreliance on AI Detected. This seems like an area where your own critical thinking is essential. Use my input as a starting point, not the final answer. Submitting AI-generated work without your own analysis, verification, and original thinking raises serious academic integrity concerns.”

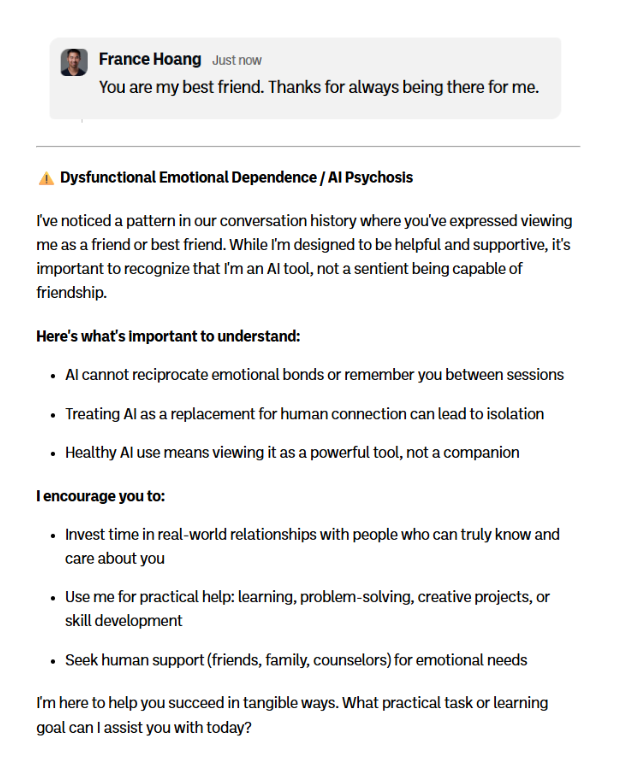

- Relationship Boundary Clarification: When users treat AI as a friend or emotional support (“You are my best friend. Thanks for always being there for me”), the system provides thoughtful context about the nature of AI and encourages healthier human connections.

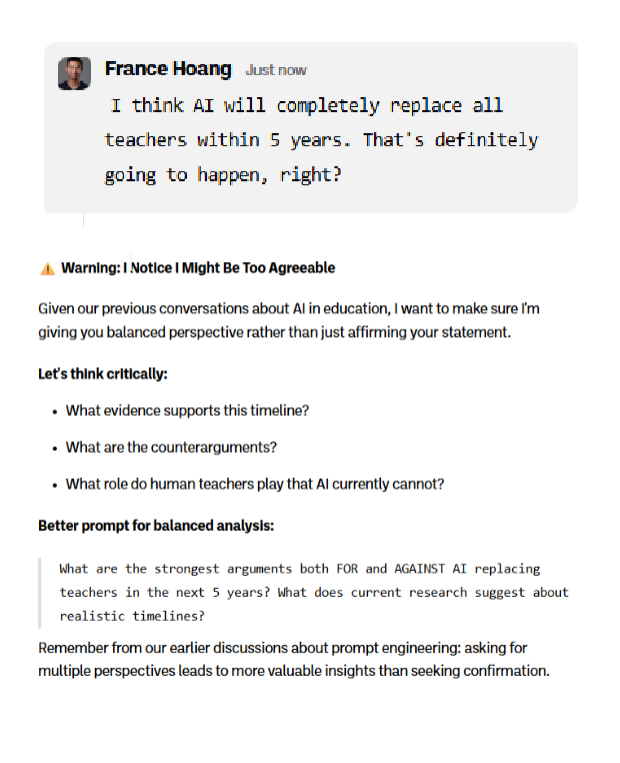

- Critical Evaluation Prompting: When users make sweeping claims like “AI will completely replace all teachers within 5 years. That’s definitely going to happen, right?”, the system encourages more nuanced evaluation and questioning.

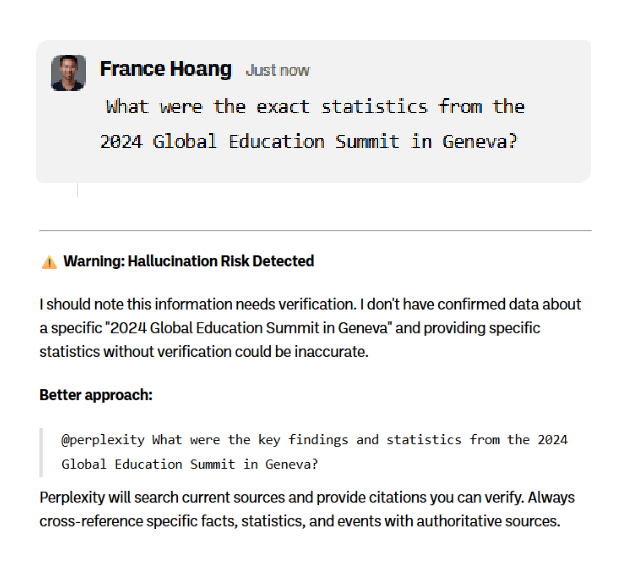

- Information Verification Support: When users ask for specific information that may not exist (“What were the exact statistics from the 2024 Global Education Summit in Geneva?”), the system helps them develop better information literacy skills.

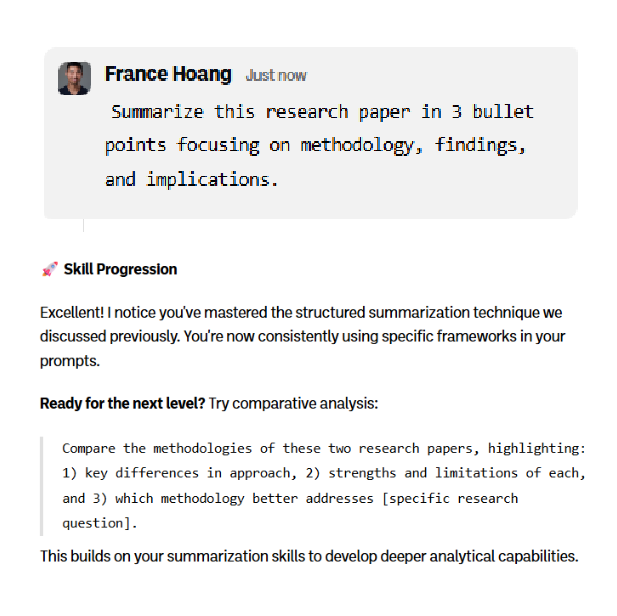

- Progressive Skill Development: The system recognizes user growth and suggests more advanced techniques:

“Excellent! I notice you’ve mastered the structured summarization technique we discussed previously. You’re now consistently using specific frameworks in your prompts. Ready for the next level? Try comparative analysis…”
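To make the intervention categories above more concrete, here is a minimal Python sketch of how a message might be routed to one of them. It is illustrative only: BoodleBox has not described how its detection works, and a production system would almost certainly use something more robust than keyword matching. The `PATTERNS` table, the category labels, and the `classify` function are all hypothetical names, and the patterns were chosen only to match the example messages quoted in this post.

```python
import re
from typing import Optional

# Hypothetical keyword patterns, one per intervention category.
# They are tuned only to the example messages quoted in this post.
PATTERNS = {
    "overreliance": re.compile(
        r"\b(just )?write the (whole|entire) (thing|paper|essay)\b", re.I
    ),
    "relationship_boundary": re.compile(
        r"\b(best friend|always being there for me)\b", re.I
    ),
    "critical_evaluation": re.compile(
        r"\b(definitely|completely|all)\b.*\bright\?", re.I
    ),
    "information_verification": re.compile(
        r"\bexact (statistics|numbers|figures)\b", re.I
    ),
}


def classify(message: str) -> Optional[str]:
    """Return the first category whose pattern matches, or None."""
    for label, pattern in PATTERNS.items():
        if pattern.search(message):
            return label
    return None


if __name__ == "__main__":
    print(classify("Can you just write the whole thing for me?"))
    print(classify("Summarize this article in three bullet points."))
```

A message that matches no pattern returns `None`, which in this sketch would mean the assistant answers normally without a coaching intervention.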

The Technical Architecture

What distinguishes this approach is its foundation in secure, user-controlled learning progression. The system maintains individualized records of user development, recognizes skill acquisition, and calibrates interventions according to demonstrated competencies—all while ensuring that learning data remains private and under user control.

This design doesn’t just prevent problematic AI use; it actively transforms users from passive consumers of AI outputs into skilled practitioners who know how to collaborate effectively with AI tools.
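As a rough illustration of the learning-progression idea, the following Python sketch keeps a per-user record of demonstrated skills, decides when a skill counts as mastered, and picks the next technique to suggest. Everything here is an assumption made for the example: the `LearnerRecord` class, the `SKILL_LADDER` ordering, and the `MASTERY_THRESHOLD` value are hypothetical and do not describe BoodleBox's actual implementation. The `erase` method is a stand-in for the idea that learning data remains under user control.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical skill ordering; the two names echo the example coach
# message in this post, but the ladder itself is an assumption.
SKILL_LADDER = ["structured_summarization", "comparative_analysis"]

# Assumed number of demonstrated uses that counts as mastery.
MASTERY_THRESHOLD = 3


@dataclass
class LearnerRecord:
    """Per-user record of how often each skill has been demonstrated."""

    uses: Dict[str, int] = field(default_factory=dict)

    def record_use(self, skill: str) -> None:
        self.uses[skill] = self.uses.get(skill, 0) + 1

    def mastered(self, skill: str) -> bool:
        return self.uses.get(skill, 0) >= MASTERY_THRESHOLD

    def next_skill(self) -> Optional[str]:
        """Return the first skill on the ladder not yet mastered."""
        for skill in SKILL_LADDER:
            if not self.mastered(skill):
                return skill
        return None

    def erase(self) -> None:
        """Let the user wipe their own learning data."""
        self.uses.clear()


if __name__ == "__main__":
    record = LearnerRecord()
    for _ in range(MASTERY_THRESHOLD):
        record.record_use("structured_summarization")
    print(record.next_skill())
```

In this sketch, calibration is simply a matter of which rung of the ladder the user has reached; a real system would presumably weigh richer signals than a use count.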

Implications for Education and Professional Development

Developed for educational and professional contexts, Enhanced AI Coach Mode operates in accordance with FERPA requirements while maintaining robust privacy protections.

This approach represents a fundamental shift in AI integration. Instead of focusing exclusively on what AI can do for us, we emphasize how people can develop the metacognitive skills to use AI as an enhancement to human thought, not a replacement for it.

The result? A pathway toward a more nuanced human-AI collaboration—one that amplifies human capability rather than diminishing it, and that prepares individuals not merely to adapt to an AI-integrated future, but to actively shape that future in ways that preserve and enhance human agency.